A way for creators to engage with their users by automating the creation of LinkedIn posts.

Project Overview

Can you provide a brief overview of the project you've been working on in the robotics lab?

The idea behind this project was to scrape the web for tech news, convert that text into post form, and publish it as a new post through the LinkedIn API.

Purpose of the project

What inspired or motivated you to choose this particular project?

With so much news coming out in the fields of robotics and AI, it is hard to keep up with the latest developments and stay informed about new research and progress. Our goal is to provide our members and those who follow us on social media with informative summaries, without overwhelming anyone with unnecessary updates and irrelevant news.

Technical Details

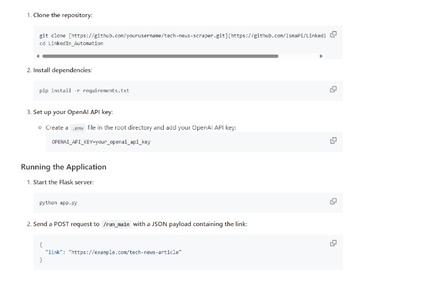

Could you explain the technical aspects of your project? What software, tools do you use?

We used Python for scripting and automation, along with various scraping technologies to gather data from the web. To avoid IP bans, we implemented proxy scraping techniques that spread our requests across multiple proxies. For automation, we used tools similar to Zapier and relied on Linux scheduling software such as cron to run tasks on a schedule. Additionally, we used the OpenAI API for natural language processing and are currently experimenting with self-hosted Large Language Models (LLMs) to improve our text generation.
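
A minimal sketch of how these pieces could fit together, assuming a scrape, summarize, publish flow. Every name here (`Article`, `run_pipeline`, the stub functions, the script path in the cron comment) is hypothetical and chosen for illustration; the real scraper, LLM call, and LinkedIn API call would be passed in as the three callables.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List


@dataclass
class Article:
    """A scraped news item (hypothetical structure)."""
    title: str
    url: str
    text: str


def run_pipeline(
    fetch_articles: Callable[[], Iterable[Article]],
    summarize: Callable[[str], str],
    publish: Callable[[str], None],
    max_posts: int = 1,
) -> List[str]:
    """Turn scraped articles into posts and hand each one to `publish`.

    The three callables stand in for the scraper, the LLM, and the
    LinkedIn API client, so the flow can be tested without any network.
    """
    posts: List[str] = []
    for article in fetch_articles():
        if len(posts) >= max_posts:
            break  # cap the number of posts per run to avoid flooding followers
        summary = summarize(article.text)
        post = f"{article.title}\n\n{summary}\n\nSource: {article.url}"
        publish(post)
        posts.append(post)
    return posts


if __name__ == "__main__":
    # Stubs for a dry run; a cron entry such as
    #   0 9 * * 1-5 python3 /path/to/post_news.py
    # (path is a placeholder) would run the script on weekday mornings.
    demo = [Article("Example headline", "https://example.com/a", "Full article text.")]
    run_pipeline(lambda: demo, lambda text: text[:100], print)
```

Passing the steps in as functions keeps the scheduling, scraping, and text generation parts independent, which makes it easier to swap the OpenAI API for a self-hosted model later.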

Challenges and Solutions

Were there any significant challenges you encountered during the project, and how did you overcome them? Can you share a specific problem-solving moment that stands out in your project?

One significant challenge we encountered during the project was managing the risk of IP bans while scraping data from various websites. To overcome this, we implemented proxy scraping techniques, which allowed us to distribute our requests across multiple IP addresses, thereby reducing the likelihood of getting banned.
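
Distributing requests across several IP addresses can be sketched as a simple round-robin over a proxy pool. This is an illustration under assumptions, not the project's actual code: `proxy_rotation` is a hypothetical helper, and the addresses come from a range reserved for documentation.

```python
from itertools import cycle
from typing import Dict, Iterator, List


def proxy_rotation(proxy_urls: List[str]) -> Iterator[Dict[str, str]]:
    """Yield proxy settings in round-robin order, forever.

    Each item is a dict in the shape that HTTP clients such as
    `requests` accept for their `proxies` argument.
    """
    if not proxy_urls:
        raise ValueError("at least one proxy URL is required")
    return ({"http": url, "https": url} for url in cycle(proxy_urls))


# Placeholder addresses from the 203.0.113.0/24 documentation range.
PROXY_POOL = ["http://203.0.113.10:8080", "http://203.0.113.11:8080"]
rotation = proxy_rotation(PROXY_POOL)

# Each outgoing request takes the next proxy in the pool, for example:
#   requests.get(url, proxies=next(rotation), timeout=10)
```

Round-robin is the simplest policy; a production setup would likely also drop proxies that fail and slow down requests to each site.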

A specific problem-solving moment that stands out was when we faced issues with the consistency and quality of the text generated for LinkedIn posts. Initially, we relied solely on the OpenAI API for natural language processing, but we found that the generated content sometimes lacked coherence or relevance. To address this, we started experimenting with self-hosted Large Language Models (LLMs), which we could fine-tune to our specific needs, resulting in more accurate and contextually appropriate posts. By integrating these self-hosted LLMs with our existing automation tools and scheduling software like cron, we were able to significantly improve the quality and reliability of our automated LinkedIn posts. The shift to self-hosted models is also a promising way to cut the cost of relying on third-party LLMs.

We are still facing some issues with hallucinations and will not deploy our solution until we are 100% certain that it will not produce misinformation or discrepancies.

It is important to note that our program respects all scraping restrictions that websites set out in their robots.txt files and terms of use.
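
A robots.txt check of this kind can be sketched with Python's standard `urllib.robotparser` module. The helper name `build_robots_checker`, the user agent string, and the sample rules are assumptions made for illustration. Terms of use still need to be reviewed by a person, since they are not machine-readable.

```python
from typing import Callable
from urllib.robotparser import RobotFileParser


def build_robots_checker(robots_txt: str, user_agent: str) -> Callable[[str], bool]:
    """Return a function that reports whether `user_agent` may fetch a URL.

    `robots_txt` is the text of a site's robots.txt file. In a live
    scraper it would be downloaded once per site before any page request.
    """
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())

    def allowed(url: str) -> bool:
        return parser.can_fetch(user_agent, url)

    return allowed


# Sample rules for illustration only.
SAMPLE_ROBOTS = """\
User-agent: *
Disallow: /private/
"""

is_allowed = build_robots_checker(SAMPLE_ROBOTS, "club-news-bot")

for url in ("https://example.com/news/article", "https://example.com/private/page"):
    # Skip any URL the site has asked crawlers not to visit.
    print(url, "->", "fetch" if is_allowed(url) else "skip")
```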

Collaboration and Teamwork

Did you collaborate with other students or team members on this project? How did teamwork contribute to the success or progress of your project?

Teamwork was one of the most pivotal parts of this project and is what allowed it to become a reality. Each person in the group brought a different set of abilities, which made it essential to work together and make the most of each individual's strengths. In the end, we managed to organize ourselves so that all the parts fit together and worked as a whole.

Learning and Takeaways

What key lessons or skills have you gained from working on this project? How has this project influenced your understanding or interest in robotics?

One of the most important takeaways from this project was learning how to integrate all the different technologies with each other. To connect the script to our self-hosted models, we had to take several measures, such as using Docker to ensure that the program runs reliably regardless of the host machine and the software installed on it. Furthermore, this project was an invaluable learning experience in web scraping tools and API usage. It not only enhanced our technical skills, but also showed us how workflows and tasks can be automated using the latest innovations in AI.

Future Development

Do you have plans for further development or improvement of your project in the future? Are there specific areas or features you'd like to explore or enhance in your project?

In the future, it could be interesting to scale this idea to other social media platforms and to other club functions, such as emails and newsletters.